If you’re a React developer, you’ve likely spun up a new React app countless times.

In recent years, you’ve probably been using a project generator. You might be running npx create-react-app my-app, npx create-next-app@latest, or npm init gatsby.

In this article, we’ll outline the set of steps that take place immediately after this command, starting when you open your new project in an IDE, access ./src, and create your first directory.

Though creating a directory is one of the simplest things a developer does, it’s not as simple as you may think. The directories you create and the libraries you install—the toolsets and developer experience you put in place in your first minutes with this new project—can have a huge impact on how flexible and easy to maintain the application will be.

Strategies & libraries you can employ when scaffolding your React app include:

- Barrel files, aliased directories, and underscored directory names

- Internationalization libraries

- TypeScript

- Accessibility

- Component libraries and theming solutions

- ESLint

- Unit testing

- Prettier

- CI/CD

Alias[ed] Investigations: Barrel Files, Aliased Directories & Underscores

Underscore Routinely-Accessed Directories

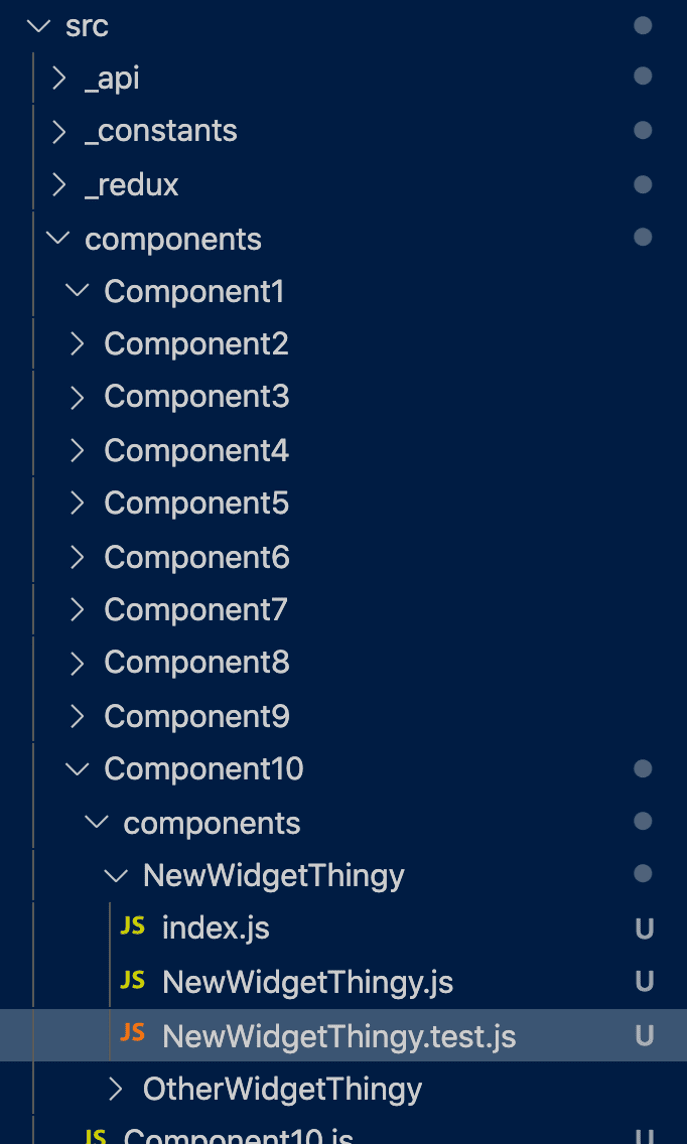

Applications that grow usually do so for a reason: they are useful and necessary. If you work on or manage a growing application, you will eventually encounter the time suck we call IDE file structure scroll.

This occurs when you are working several directories deep, for example in ./src/components alongside 15 or 20 components. At some point, you need to access a file in your api folder and a file in your redux folder, where state is managed.

If these directories are all named purely alphabetically, you will have to scroll up, open the api directory to access file one, and then scroll far down to open the redux directory and access file two.

Though this won’t seem like a huge hassle the first few times you encounter it, keep in mind that api and redux are a special type of directory. They contain files that are accessed on a regular basis by many parts of the application.

Consequently, during development, you will need to access them frequently. The time spent scrolling up to api and down to redux adds up to minutes which — over the lifetime of the application — will add up to hours.

api and redux are also much less likely to contain hundreds of directories or files than components. Even with their folders open in the IDE, they have a less significant effect on IDE file structure scroll than components.

Because so many parts of the application depend on them, it makes sense to anchor them in one place within your directory structure, both to make them simple to find and to make them accessible with one scroll.

The easy solution to this problem is to prefix these directories with an underscore (_). The underscore prefix will pin them to the top of the parent directory, providing a consistent placement for all high-use directories.

You can get more elaborate with your prefixes, adding numbers or letters to enforce more specific ordering, but often an underscore is enough.

As you can see in the image above, the new directory structure is more organized and cleaner. The commonly-used and universal resources are all in a consistent location at the top of the parent directory, so we know easily where to access them.
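To see why the underscore pins a directory to the top, it helps to look at how a plain character-code sort orders names. The sketch below assumes your file explorer sorts roughly the way JavaScript's default Array.prototype.sort does; the directory names are illustrative, and some explorers use locale-aware or case-insensitive ordering, so it is worth confirming the result in your own IDE.

```javascript
// Illustrative directory names; not taken from a real project.
const plainNames = ['components', 'api', 'hooks', 'redux', 'pages'];
const prefixedNames = ['components', '_api', 'hooks', '_redux', 'pages'];

// The default sort compares UTF-16 code units. '_' (95) comes before 'a' (97),
// so underscored names float above every lowercase name.
const sortNames = (names) => [...names].sort();

console.log(sortNames(plainNames));
// [ 'api', 'components', 'hooks', 'pages', 'redux' ]
console.log(sortNames(prefixedNames));
// [ '_api', '_redux', 'components', 'hooks', 'pages' ]
```

Note that uppercase letters have lower character codes than the underscore, so under this kind of ordering a directory named Components would still sort above _api.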

Aliased Directories

Let’s say you’re a few days in with your new React app when you decide to make the above change and prefix some directories with an underscore.

If you navigate the file structure via command line or your operating system’s file inspector and simply rename the redux directory to _redux, every reference to ./src/redux/reduxSlice.js in your application will break.

If you’re using an IDE like Visual Studio Code, it will probably recommend that those paths be rewritten, which is great.

But there is a more transparent and durable way to make your application automatically support the renaming of these top-level directories using aliased directories.

An alias is a secondary or symbolic reference to an expression, symbol, or path. When you configure an aliased directory in a React app, you tell Webpack what absolute path that alias represents. You are then free to use the alias instead of a relative path in your imports.

// Relative path in an import

import MyNewWidget from '../components/MyNewWidget/MyNewWidget'

// Import from aliased directory

import MyNewWidget from '@components/MyNewWidget/MyNewWidget'

You can now move the importing file to a different directory without breaking the import of MyNewWidget. You can also rename ./src/components entirely.

When you update the aliased directory configuration, all imports from @components will continue to work as before.

As we mentioned above, Visual Studio Code and some other IDEs will offer to fix import paths when you move a directory, but aliased directories are built into the application and not dependent upon the IDE to fix your mistakes.
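Conceptually, an alias is a lookup table applied to the start of an import path. The following is a simplified sketch of that idea, not Webpack's actual resolver; resolveAlias and the alias table are hypothetical names used only for illustration.

```javascript
// Hypothetical alias table, mirroring the kind of mapping a bundler is given.
const aliases = {
  '@components': 'src/components',
  '@local-api': 'src/_api',
};

// Replace a leading alias with its target directory. Only an exact match or a
// match followed by '/' counts, so '@components-extra' is left untouched.
function resolveAlias(importPath, table) {
  for (const [alias, target] of Object.entries(table)) {
    if (importPath === alias) return target;
    if (importPath.startsWith(`${alias}/`)) {
      return target + importPath.slice(alias.length);
    }
  }
  return importPath; // not aliased: leave for normal resolution
}

console.log(resolveAlias('@components/MyNewWidget/MyNewWidget', aliases));
// src/components/MyNewWidget/MyNewWidget

// Renaming the directory means editing one table entry, not every import.
const renamed = { ...aliases, '@components': 'src/_components' };
console.log(resolveAlias('@components/MyNewWidget/MyNewWidget', renamed));
// src/_components/MyNewWidget/MyNewWidget
```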

If you’re using Craco, you can configure aliased directories with a ./craco.config.js file:

const path = require('path')

module.exports = {

webpack: {

alias: {

'@components': path.resolve(__dirname, 'src/components/'),

'@local-redux': path.resolve(__dirname, 'src/_redux/'),

'@local-api': path.resolve(__dirname, 'src/_api/'),

'@constants': path.resolve(__dirname, 'src/_constants/'),

'@assets': path.resolve(__dirname, 'src/_assets/'),

},

},

}

Note that in the example above, not every directory and child directory in the application has been aliased. Typically only the top-level directories in the codebase are aliased, and nothing below.

A few of the aliases are prefixed with local-. This is a trick we use to avoid conflicts with external npm libraries. For example, our project has a directory named redux. This can cause imports of the Redux library (import { createStore } from 'redux') to conflict with imports from your local @redux directory.

In general, we prefix any alias whose name is similar to a commonly used React library with local-. You can use whatever prefix (or suffix) you like to prevent these conflicts.

One tedious aspect of aliased directories is that you may need to configure and update them in a few different places, depending on your configuration. TypeScript may need to be configured as well as Webpack:

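{

"compilerOptions": {

"baseUrl": ".", // Must be specified to use "paths".

"paths": {

"@components": ["src/components"], // Exact match, relative to "baseUrl"

"@components/*": ["src/components/*"], // Wildcard match for deeper imports

"@local-redux": ["src/_redux"],

"@local-redux/*": ["src/_redux/*"],

"@local-api": ["src/_api"],

"@local-api/*": ["src/_api/*"],

"@constants": ["src/_constants"],

"@constants/*": ["src/_constants/*"],

"@assets": ["src/_assets"],

"@assets/*": ["src/_assets/*"]

}

}

}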

Finally, Jest and React Testing Library run outside of Webpack, so they do not pick up its aliases. If you are getting errors about imports when running unit tests, add the alias declarations to the jest config in ./package.json:

"jest": {

"moduleNameMapper": {

"^@components(.*)$": "<rootDir>/src/components$1",

"^@local-redux(.*)$": "<rootDir>/src/_redux$1",

"^@local-api(.*)$": "<rootDir>/src/_api$1",

"^@constants(.*)$": "<rootDir>/src/_constants$1",

"^@assets(.*)$": "<rootDir>/src/_assets$1"

}

},
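One way to reduce the tedium of keeping several alias lists in sync is to derive them from a single map. The sketch below is one possible approach, not an established convention; ALIASES, toWebpackAlias, toTsPaths, and toJestMapper are hypothetical names, and the output shapes simply follow the three configurations shown above.

```javascript
// Single source of truth: alias name -> directory relative to the project root.
const ALIASES = {
  '@components': 'src/components',
  '@local-redux': 'src/_redux',
  '@local-api': 'src/_api',
};

// Webpack: alias -> absolute path. `resolveDir` is injected so this stays pure;
// in craco.config.js it could be (dir) => path.resolve(__dirname, dir).
const toWebpackAlias = (aliases, resolveDir) =>
  Object.fromEntries(
    Object.entries(aliases).map(([name, dir]) => [name, resolveDir(dir)])
  );

// TypeScript: an exact entry for barrel imports plus a wildcard for deep ones.
const toTsPaths = (aliases) =>
  Object.fromEntries(
    Object.entries(aliases).flatMap(([name, dir]) => [
      [name, [dir]],
      [`${name}/*`, [`${dir}/*`]],
    ])
  );

// Jest: regex keys mapped onto <rootDir>-relative replacements.
const toJestMapper = (aliases) =>
  Object.fromEntries(
    Object.entries(aliases).map(([name, dir]) => [
      `^${name}(.*)$`,
      `<rootDir>/${dir}$1`,
    ])
  );

console.log(toTsPaths(ALIASES));
console.log(toJestMapper(ALIASES));
```

Because tsconfig.json and package.json are static files, the TypeScript and Jest output would still need to be written to disk by a small script or pasted in by hand; only a JavaScript config such as craco.config.js could call these helpers directly.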

Barrel Files

Now that you have the tools to create a much more usable, durable directory structure that will reduce developer hassles and more easily adapt to changes in the codebase, let’s take a look at a few more examples. Below is the path to MyNewWidget from the example above:

import MyNewWidget from '@components/MyNewWidget/MyNewWidget'

Let’s say you want to import 5 widgets from @components. With no additional changes, those imports would look like so:

import MyNewWidget from '@components/MyNewWidget/MyNewWidget'

import MyNewWidgetBig from '@components/MyNewWidgetBig/MyNewWidgetBig'

import MyNewWidgetSmall from '@components/MyNewWidgetSmall/MyNewWidgetSmall'

import MyNewWidgetPretty from '@components/MyNewWidgetPretty/MyNewWidgetPretty'

import MyNewWidgetUgly from '@components/MyNewWidgetUgly/MyNewWidgetUgly'

A lot of React developers are accustomed to Visual Studio Code, and VS Code will often automatically suggest these import paths for you.

But that’s still a lot of lines of imports. Additionally, each path has to go all the way to the JavaScript file in which the component is defined.

Not pretty. This project could do with some barrel files.

There are many articles about barrel files on the Internet, but most of them don’t start from first principles. We’ve already seen that in React you import modules where they will be used. Conversely, before a module can be used, it must be exported.

If you have written a React component before, you have exported a module:

import React from 'react'

const MyComponent = () => {

return <div>This is my component!</div>

}

export default MyComponent

Without that export default at the bottom of the file, your component can’t be used anywhere else in the app. Barrel files are files that standardize and consolidate the exporting of modules.

There are just about as many novel barrel file patterns as there are blog posts about barrel files. We’ll outline one simple pattern and explain how it works together with aliased directories.

First, every component we create has an index.js file in it, a barrel file for one or a few components, that exports the main component in the directory as well as any child components that might be imported elsewhere.

Most components only need one export. If a child component doesn’t need to be imported elsewhere, it doesn’t need to be exported:

My export for Component10:

export { default } from "./Component10";

For a directory like ./src/components, which has a lot of child elements at the same level that are likely to be referenced in multiple places in the app, it’s worthwhile to create an additional barrel file at ./src/components/index.js.

Note that because we have an index.js with an export in each individual component directory, our paths no longer have to go all the way to ./Component10/Component10.js:

export { default as Component1 } from './Component1'

export { default as Component2 } from './Component2'

export { default as Component3 } from './Component3'

export { default as Component4 } from './Component4'

...

export { default as Component10 } from './Component10'

Also note that we’re renaming and exporting the default export from each component directory. If there were other, non-default components those exports would look different:

export { MyOtherComponent, MyNewComponent, MyOldComponent } from './Component1'

What we have effectively done, in ./src/components/index.js, is turn @components into a local component library. Now, when we want to import 5 components from ./src/components, instead of 5 individual default imports:

import MyNewWidget from '@components/MyNewWidget/MyNewWidget'

import MyNewWidgetBig from '@components/MyNewWidgetBig/MyNewWidgetBig'

import MyNewWidgetSmall from '@components/MyNewWidgetSmall/MyNewWidgetSmall'

import MyNewWidgetPretty from '@components/MyNewWidgetPretty/MyNewWidgetPretty'

import MyNewWidgetUgly from '@components/MyNewWidgetUgly/MyNewWidgetUgly'

We can have a single import path to @components with 5 named imports:

import {

MyNewWidget,

MyNewWidgetBig,

MyNewWidgetSmall,

MyNewWidgetPretty,

MyNewWidgetUgly

} from '@components'

With that, we’ve created a more durable and legible import pattern that can be leveraged all over the application.

One helpful tool is a template extension to automatically generate new components that follow these patterns.

This is especially helpful, in large projects with multiple developers, for keeping the export patterns consistent across barrel files.

You can also use extensions that automatically generate barrel files but be sure that the pattern the extension uses will produce the kinds of imports you want for your application.
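If you would rather not depend on an extension, the top-level barrel file is simple enough to generate yourself. The sketch below is one possible approach under the pattern described above; buildBarrel is a hypothetical helper, and it assumes every component directory has its own index.js with a default export.

```javascript
// Build the text of ./src/components/index.js from a list of component
// directory names. Assumes each directory's own index.js has a default export.
function buildBarrel(componentNames) {
  const lines = [...componentNames]
    .sort()
    .map((name) => `export { default as ${name} } from './${name}'`);
  return lines.join('\n') + '\n';
}

console.log(buildBarrel(['MyNewWidgetBig', 'MyNewWidget']));
// export { default as MyNewWidget } from './MyNewWidget'
// export { default as MyNewWidgetBig } from './MyNewWidgetBig'
```

In a real script, the list of names could come from reading the components directory, and the returned string could be written to index.js as part of your component template step.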

“Fake It, Till You Make It:” Internationalization Before Translation

If you’ve worked as a freelance front-end developer for any amount of time, you’ve likely had this experience:

- Client contracts you to develop an app

- You develop and launch the app

- The app is a huge success

- Client requests that another language be added to the app

Unlike almost everything else in a React app, natural language strings are notoriously hard to search for or locate using an automated tool. It takes very little effort to implement and use an internationalization library from the start; it takes an immense amount of effort to retrofit one later.

We recommend that you implement an internationalization library as soon as you start. Don’t even ask your client. She (and your team) will thank you later.

There are very simple and very complex libraries in this category. And there are heavyweight solutions, like react-intl, that ship utilities for not only tagging language strings and switching between languages, but also for importing and exporting language string sets for manual or automated translation.

These libraries all work in a similar fashion. You declare or initialize the library, sometimes wrapping the rest of your application in a provider, and feed in the set of language strings.

When your user toggles a language select, you update a lang or locale prop on that provider.

import i18n from '@pureartisan/simple-i18n'

// @pureartisan/simple-i18n init

i18n.init({

languages: languageDefinitions,

locale: 'en'

})

// react-intl provider (messages maps each message id to its translated string)

import {IntlProvider, FormattedMessage} from 'react-intl'

const App = ({messages}) => (

<IntlProvider messages={messages} locale="en">

<h1>

<FormattedMessage

id="myMessage"

defaultMessage="Welcome to our site!"

description="Welcome message in site header"

/>

</h1>

</IntlProvider>

)
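To make the shared idea concrete, here is a dependency-free sketch of what such a library does at its core: hold a set of strings per locale, track the active locale, and look strings up by id. It is not how either library above is implemented; createTranslator and the catalog are hypothetical and exist only for illustration.

```javascript
// Hypothetical message catalog: locale -> message id -> string.
const catalog = {
  en: { welcome: 'Welcome to our site!', signOut: 'Sign out' },
  de: { welcome: 'Willkommen auf unserer Seite!' },
};

// Create a tiny translator with a current locale and a fallback locale.
function createTranslator(messages, initialLocale, fallbackLocale = 'en') {
  let locale = initialLocale;
  return {
    setLocale(next) {
      locale = next; // what a language select would call
    },
    t(id) {
      const current = messages[locale] || {};
      const fallback = messages[fallbackLocale] || {};
      // Prefer the active locale, then the fallback, then the raw id.
      return current[id] ?? fallback[id] ?? id;
    },
  };
}

const i18nSketch = createTranslator(catalog, 'en');
console.log(i18nSketch.t('welcome')); // Welcome to our site!
i18nSketch.setLocale('de');
console.log(i18nSketch.t('welcome')); // Willkommen auf unserer Seite!
console.log(i18nSketch.t('signOut')); // Sign out (falls back to en)
```

The fallback step is the part that matters early in a project: untranslated strings still render in the default language instead of breaking the interface.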

We’ve mentioned that react-intl will export your full collection of language strings for you. This library is well-suited to large projects with manual or automated translation workflows.

With a workflow of this sort, you would export the language strings from your application to a JSON file, hand them off to a team of translation talent, and get back a series of translated JSON files, one for each language.

In cases like this, the description prop is especially important as it gives the translator what might be important context for translation.

Let’s say your client is securing this translation talent as you work, but you will be developing the app for weeks or perhaps months before those translators get ahold of your file of strings.

What happens if you need to test or demonstrate the locale switching before that?

There are automated solutions that will take an AI’s best guess at how long a given string might be in your most lengthy locale (usually German). But we’ve also created Node.js scripts to generate fake translation files:

const fs = require('fs')

const LOCALES = require('./locales.json')

const SOURCE = 'src/lang/extr/en.json'

fs.readFile(SOURCE, 'utf8', (err, data) => {

console.log(`Reading source file at ${SOURCE}.`)

if (err) {

return console.log('Error reading source file.', err);

}

LOCALES.forEach((locale) => {

if (locale.id !== 'en') {

const result = data.replace(/",/g, `-${locale.id}",`);

fs.writeFile(`src/lang/transl/${locale.id}.json`, result, 'utf8', (writeErr) => {

if (writeErr) {

console.log('Error writing translation file.', writeErr);

} else {

console.log(`Translations for ${locale.id} written successfully.`)

}

});

}

})

return true

})

This simple script loops through each locale and generates a new file with fake translations. Each fake translation consists of the default string (in this case, the en string) with the new locale ID appended.

Until official translations are provided, these files can be imported back into the application to test locale switching.
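One limitation of a text-replacement approach is that it only touches strings that are followed by a comma, so the last value in each JSON object is left unchanged. A variant that parses the JSON avoids this. The sketch below is an alternative under that assumption, not the script we use; fakeTranslate and the sample strings are hypothetical.

```javascript
// Append a locale suffix to every string in a parsed JSON value, recursing
// through nested objects and arrays. Non-string values pass through unchanged.
function fakeTranslate(value, localeId) {
  if (typeof value === 'string') return `${value}-${localeId}`;
  if (Array.isArray(value)) return value.map((v) => fakeTranslate(v, localeId));
  if (value !== null && typeof value === 'object') {
    return Object.fromEntries(
      Object.entries(value).map(([key, v]) => [key, fakeTranslate(v, localeId)])
    );
  }
  return value;
}

// Hypothetical extracted strings; a real file would be read and JSON.parse'd.
const source = { welcome: 'Welcome to our site!', signOut: 'Sign out' };
console.log(JSON.stringify(fakeTranslate(source, 'de'), null, 2));
```

Because this version suffixes every string it finds, a file that also stores descriptions next to each message would have those suffixed too; restrict the recursion to the message field if that matters for your format.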

TypeScript or Not TypeScript: That Is the Question

For most developers, their relationship with TypeScript is either love or hate. TypeScript maintainers call it a “superset” of JavaScript.

TypeScript was developed because JavaScript is a loosely typed language. You do not have to declare the data types of variables, and you can intentionally or accidentally change data types during execution.

This can result in unexpected behavior. For example, 1 == '1', a comparison of the number 1 with the string '1', is true.

Those who have been working with JavaScript for a long time understand this and anticipate when to be cautious about data types and when they can safely leverage loose typing.

They know to use strict equality when checking equivalence. For example, 1 === '1' is false. And they (probably) have some habits that involve on-the-fly type coercion.
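A few more comparisons make the difference between loose and strict equality easier to see. These are standard JavaScript behaviors rather than anything specific to React:

```javascript
// Loose equality coerces operands before comparing.
console.log(1 == '1'); // true
console.log(0 == ''); // true
console.log(null == undefined); // true

// Strict equality compares type and value, with no coercion.
console.log(1 === '1'); // false
console.log(0 === ''); // false

// Coercion also shows up in arithmetic.
console.log('2' + 1); // 21 (string concatenation)
console.log('2' * 1); // 2 (numeric multiplication)
```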

TypeScript, by contrast, adds static typing to JavaScript. Variables are declared with types, and those types are enforced at compile time.

const myVar: string = 'TypeScript!'

There are many advantages to strict typing. For any project under active development by a team of developers, anything more complex than a simple single page app or a Gatsby site, TypeScript is a good idea.

But for your weekend project or your sister-in-law’s knitting blog, it might not be worth the overhead. Weigh TypeScript’s advantages against the learning curve and implementation challenges.

The adoption of TypeScript often goes hand in hand with the jettisoning of PropTypes prop checking from the project.

It’s important to remember that TypeScript and PropTypes do different things. TypeScript checks types within your code at compile time. PropTypes checks the type of any prop fed into your component at runtime.

TypeScript is therefore not the best tool to verify that data you receive at runtime is of the proper type or format. External data fetched from an API at runtime may still benefit from old-fashioned PropTypes checking.
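To illustrate what a runtime check catches that a compile-time check cannot, here is a small hand-rolled validator. It is not PropTypes and does not use its API; validateShape and userShape are hypothetical names, and the point is only that the check runs against the data that actually arrives.

```javascript
// Hypothetical shape for a user record returned by an API.
const userShape = { id: 'number', name: 'string', email: 'string' };

// Return a list of human-readable problems; an empty list means the data
// matches the expected shape.
function validateShape(data, shape) {
  if (data === null || typeof data !== 'object') {
    return ['expected an object'];
  }
  return Object.entries(shape)
    .filter(([key, type]) => typeof data[key] !== type)
    .map(([key, type]) => `${key}: expected ${type}, got ${typeof data[key]}`);
}

// Simulated response in which the API sent the id as a string.
const response = JSON.parse('{"id": "42", "name": "Ada", "email": "ada@example.com"}');
console.log(validateShape(response, userShape));
// [ 'id: expected number, got string' ]
```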

[ES]Lint Traps: Is Stricter Always Better?

ESLint is the linting solution in place in most React applications. ESLint offers a lot of rules. Some pertain to JS conventions, others to formatting and layout.

There are a variety of rulesets that can be installed and extended within your ESLint config. Some are very strict, some relatively lenient.

What you must decide is how strict or lenient to be, and how this is informed or affected by your overall business and management strategies.

Typically, there are two ways to manage onboarding risk for a development team:

The first is to lock down your applications in various ways: with strict typing, ESLint rulesets, and other kinds of scaffolding strategies.

The second is to create a culture of documentation, collaboration, and low-stakes learning among your developers.

If a strict set of linting rules is justified by your security or reliability needs, or those of your clients, then by all means use the tools available to your organization. But we recommend that you first consider the long-term benefits of offering young talent a more lenient development experience and a supportive work culture.

ESLint and TypeScript should never be a substitute for proper mentorship and a positive work environment.

“But There’s Nothing To Test!” Jest and React Testing Library

It’s frustrating to stop and add unit tests when you’ve just started building your application. Especially for layout components that receive few or no props.

A way around this is to add a very simple test file to every new component you create: a test that simply renders the component. This test file can be generated from a template as the rest of the component is scaffolded.

You may have noticed that the Create React App generator creates a test file in the root directory:

import { render, screen } from '@testing-library/react';

import App from './App';

test('renders learn react link', () => {

render(<App />);

const linkElement = screen.getByText(/learn react/i);

expect(linkElement).toBeInTheDocument();

});

// What we recommend that you add to your template is even simpler:

import { render } from '@testing-library/react';

import ComponentName from './ComponentName';

test('renders ComponentName', () => {

render(<ComponentName />);

});

You might think that simply rendering the component doesn’t test anything. And it is true that there are no expect() statements in the above snippet. Jest alone will provide basic testing of return values for utilities and custom hooks, and these tests should be put in place concurrent with function and hook development.

But for components, you can catch a lot of errors in your application logic and JSX by simply rendering the component when unit tests are run. Oftentimes we don’t even know what functionality will be added to a component when scaffolding out the application, and it doesn’t make sense yet to test for language strings, images, styling, or responses to click events.

Instead, simply be consistent. Implement a component template, and configure it to create a test file which automatically renders the component.

And when someone on the team circles back to add test coverage, the files and rendering will already be in place.
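If your template tooling can run a script, generating the render-only test is a one-function job. The sketch below is one possible way to do it; buildRenderTest is a hypothetical helper, and it assumes the component is the default export of a file named after it in the same directory.

```javascript
// Return the contents of ComponentName.test.js for a given component name.
function buildRenderTest(componentName) {
  return [
    "import { render } from '@testing-library/react';",
    `import ${componentName} from './${componentName}';`,
    '',
    `test('renders ${componentName}', () => {`,
    `  render(<${componentName} />);`,
    '});',
    '',
  ].join('\n');
}

console.log(buildRenderTest('MyNewWidget'));
```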

Mirror Mirror On the Wall, Who’s the Prettiest of Them All?

If you’ve been working as a developer long enough, you remember the days when code style standards were enforced manually, in code reviews, and referenced in extensive documentation.

Fortunately, most modern IDEs now support formatting plugins. For JavaScript projects, the most common plugin is Prettier.

Implementing Prettier is simple: everyone on the team installs the Prettier plugin on their IDE, you agree on your formatting preferences, and you add Prettier configuration to ./package.json.

Prettier does something different than TypeScript or ESLint. In fact, if you’re not careful, Prettier’s Format on Save can conflict with some ESLint rules, reintroducing the issue as soon as you save the file.

But instating and uniformly using a Prettier configuration will help your team to avoid introducing unmodified but reformatted lines into your git commits.
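One common way to keep ESLint and Prettier from fighting over formatting is the eslint-config-prettier package, which turns off the ESLint rules that overlap with Prettier. The fragment below is a sketch that assumes an .eslintrc-style config file and that eslint-config-prettier and eslint-plugin-react are installed; your project's base rulesets will likely differ.

```javascript
// .eslintrc.js (sketch): the rulesets listed here are assumptions, not a
// recommendation for any particular project.
module.exports = {
  extends: [
    'eslint:recommended',
    'plugin:react/recommended',
    // Keep 'prettier' last so it can switch off formatting rules that
    // would otherwise conflict with Prettier's output.
    'prettier',
  ],
};
```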

What is “A11y” Anyway?

It takes years of automated and manual testing—not to mention training—to build skills in Web accessibility (commonly abbreviated as A11y).

There are rudimentary aspects like alt attributes, aria-label attributes, and color contrast issues that are easily checked with automated tools. But these automated checks can’t teach a nuanced application of semantic HTML5 elements, or how to manually test for keyboard traps.

For this reason, it’s difficult to reach a state where your entire front-end development team is fully qualified to conduct an audit for WCAG level AA accessibility.

What you can do is teach your developers to run an automated test every time they update an interface. Pa11y is an excellent tool that can be run locally.

Chrome’s Lighthouse also includes an A11y audit. These tools won’t catch everything, but they will rule out common A11y issues and save time that can be allocated to manual A11y audits.

One Script to Bring Them All and in the [Pipeline] Bind Them

If your organization’s git repository provider offers a CI/CD pipeline, use it to run the build script and execute automated checks exposed by the tools introduced above.

Unit tests, ESLint, accessibility, and Prettier checks can be run for each pull request. Translation files can be exported, and fake translation files generated, before an Express server is stood up for testing.

Get these automated checks in place as soon as possible so your developers can get used to the process: you don’t want to be stuck troubleshooting the 342 ESLint and React Testing Library failures blocking urgent pull requests two days before your site goes live.
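As a starting point, the checks can be wrapped in a single script that a pipeline step calls. The sketch below assumes ESLint, Prettier, and Jest are installed locally; the CHECKS list and runChecks are hypothetical, and the commands should be swapped for whatever your project actually runs.

```javascript
// Hypothetical check list; swap in whatever commands your project uses.
const CHECKS = [
  { name: 'lint', command: 'npx eslint src' },
  { name: 'format', command: 'npx prettier --check src' },
  { name: 'unit tests', command: 'npx jest' },
];

// Run every check even if an earlier one fails, so one pipeline run reports
// all problems. `run` executes a command and throws on failure.
function runChecks(checks, run) {
  const failures = [];
  for (const { name, command } of checks) {
    try {
      run(command);
    } catch (error) {
      failures.push(name);
    }
  }
  return failures;
}

// In a real script, `run` could wrap child_process.execSync:
//   const { execSync } = require('child_process');
//   const failed = runChecks(CHECKS, (cmd) => execSync(cmd, { stdio: 'inherit' }));
//   process.exit(failed.length > 0 ? 1 : 0);

// Dry run with a stand-in runner that fails only the format check.
const failed = runChecks(CHECKS, (cmd) => {
  if (cmd.includes('prettier')) throw new Error('formatting differs');
});
console.log(failed); // [ 'format' ]
```

Collecting failures instead of stopping at the first one is a design choice: it costs a little pipeline time but tells the developer everything that needs fixing in one pass.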

There’s an added benefit to automating these checks on pull requests. When your CI server (Jenkins, for example) is enforcing whatever coding standards you have chosen to employ, you also free up manual code reviews for higher-level discussion (increasing mentorship and improving that “supportive work culture” we mentioned above).

In Conclusion

This article outlines basic scaffolding strategies (some as simple as naming a directory) that you can use to keep technical debt low and help your application grow with your development team and organization over time.

These are only a few of the strategies AIM consultants might recommend for your unique use cases and business needs.

At AIM Consulting, our application development approach is team-centric and holistic, from architecture and design to modernizing software development processes and custom application development.

Our experts can help strategize and resolve issues with architecture, security, and buggy performance — and clear a stacked backlog.

AIM Consultants work with your team to reach all of your current and future application development targets faster, more efficiently, and at the highest quality.
